Cloudflare LLM deployment demo

Is CF a better choice than AWS/GCP?

DEMO. ANALYZE. DOCUMENT. REPEAT.

Goals of this Substack QS

This Substack QS (quick start) briefly describes the steps that Copilot and I used to:

(ch1) Deploy llama-3.1-8b-instruct to Cloudflare.

(ch3) Add a POST endpoint (for prompts).

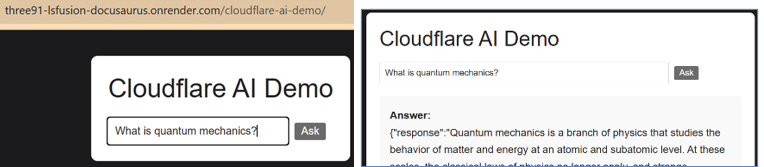

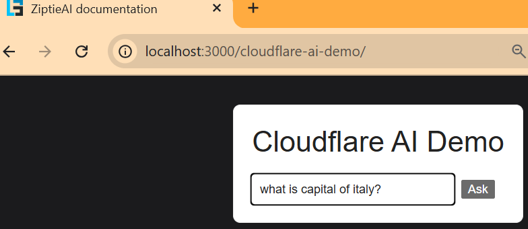

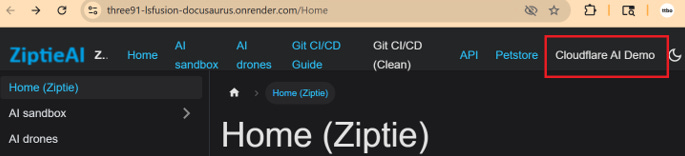

(ch4) Add prompt entry textbox on docs3.ziptieai.com.

I’m not certain yet, but (at least for my needs) Cloudflare looks like a great replacement for AWS/GCP and a great alternative to the Hugging Face deployment used in the previous Substack post (#431). For details, see docx #432.

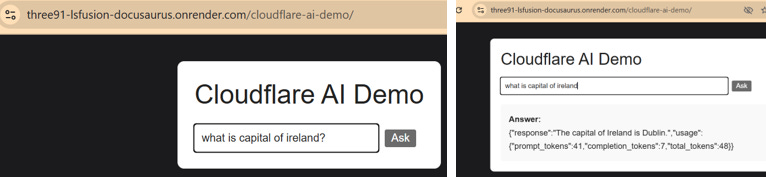

Final result at docs3.ziptieai.com:

TL;DR ?

No time to read it all? Then either:

To do the demo, go to chapter “Prerequisites”.

Or read the last chapter, Ch 5 “Post-demo summary”.

TOC

Chapters in this post

Terminology

Concepts

Prerequisites

Ch 1 CF YT demo (problems)

Ch 3 CPLT add public POST endpoint for prompts

Ch 4 Add prompt entry textbox on docs3.ziptieai.com

Ch 5 Post-demo summary

Terminology

In this QS:

CPLT = Copilot (from Microsoft).

Co-pilot = generic term for products like CPLT (usually inside VS Code).

Concepts

C1 Wiki section 4.0b “Platforms and tools”

C3 Workflows

C1 Wiki section 4.0b “Platforms and tools”

Section 3 “Youtube demos”. Previously I focused on this Wiki section (doing YouTube demos with the help of AI co-pilots). This was good for getting wide exposure to the ecosystem.

Section 4 “GPT/CPLT demos”. In July 2025 the focus shifted to this section. These are “clean-slate” demos using only AI co-pilots (ChatGPT or MS Copilot) for assistance. This QS (#432) is the latest of these demos.

This demo is in subsection 4.0b.

C3 Workflows

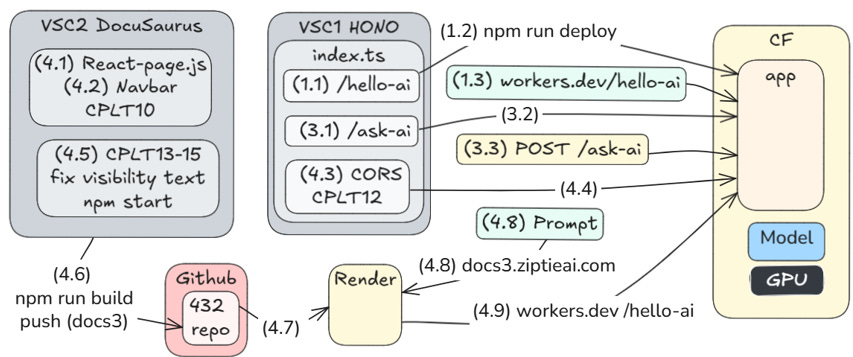

The diagram shows the workflows in the 3 chapters of this QS:

Ch 1 Create basic hello endpoint (YT video).

Ch 3 Create POST prompt endpoint that returns response.

Ch 4 Add to docs3.ziptieai.com website.

The following shows the final successful test result at docs3.ziptieai.com:

Prerequisites

CPLT17

(1) Microsoft Copilot (or other co-pilot)

You have access to my repo, but setting this up always produces errors. Your chances of success are much better with a co-pilot to help fix them.

Ch 1 Cloudflare YouTube demo (problems)

Based on the video “Cloudflare Workers AI: Build a "Hello, World" AI App!” from Cloudflare Developers (the video contains errors).

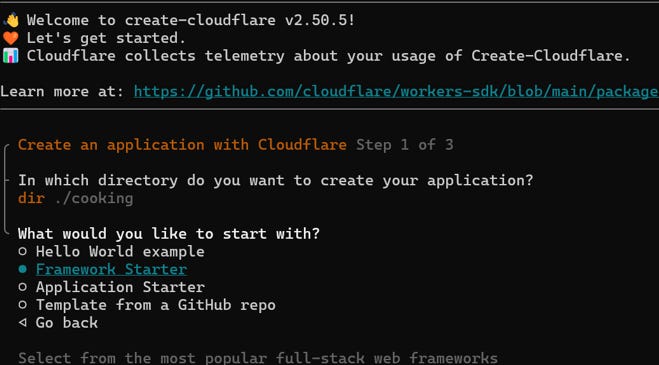

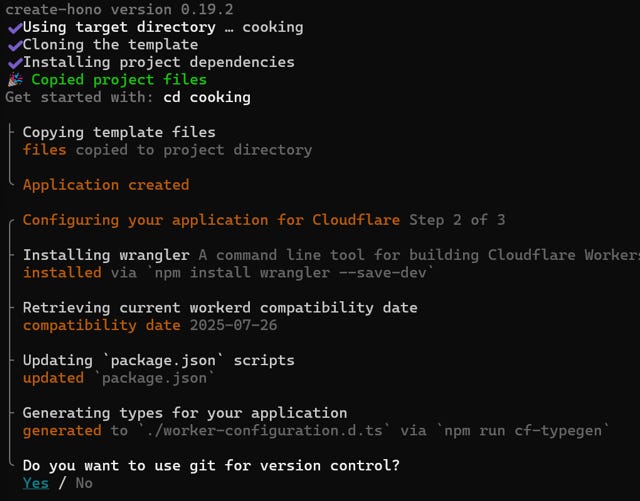

1.1 0:26 Create a new application (runs locally)

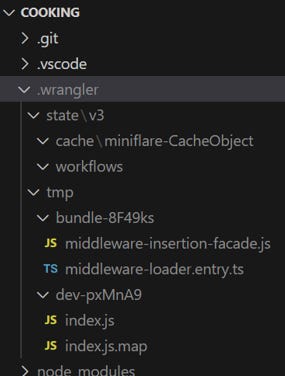

(1) Open PowerShell

(2) Create directory /432_cloudflare

(3) cd 432_cloudflare

(4) npm create cloudflare@latest

(5) Enter directory name: cooking

(6) Choose Framework Starter

(7) Select Dev framework Hono

(8) OK to proceed Y

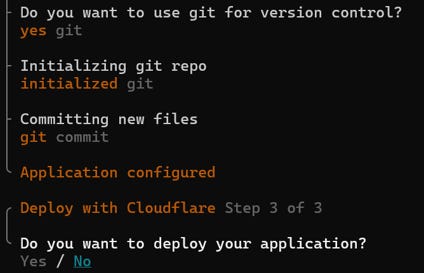

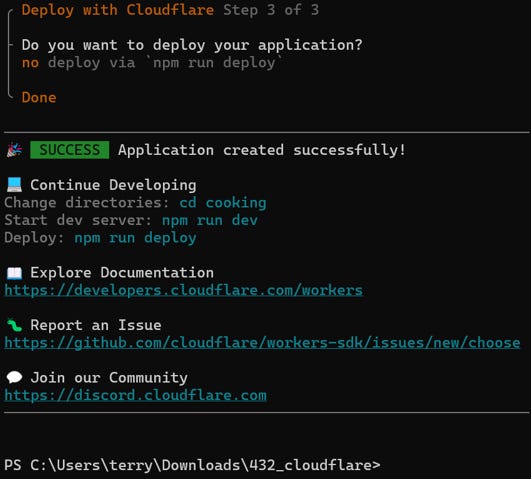

(9) Use Git for version control YES

(10) Deploy app NO

(11) cd cooking

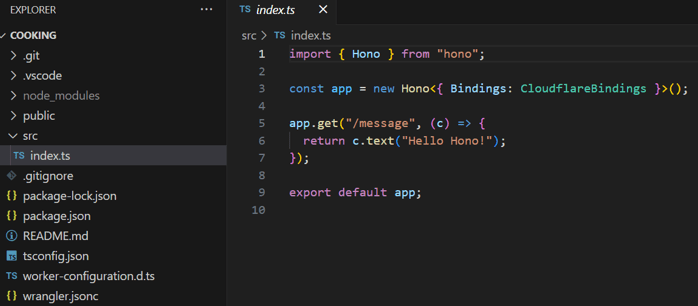

(12) code .

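(13) In the video he uses the route ‘/’. That will fail (probably because the template serves the static assets in ./public at ‘/’). Use ‘/message’ instead.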

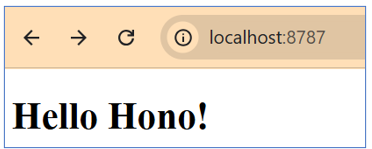

(14) npm run dev

(15) Press b to open the app in a browser

1.2 2:28 Adding Workers AI to the project

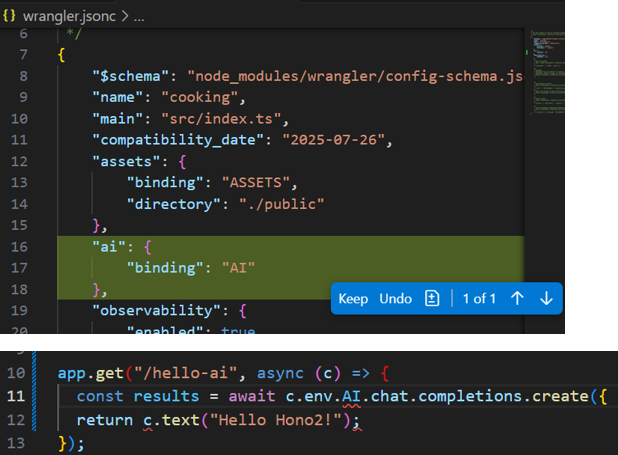

(16) CPLT2 fix error

You are using wrangler.jsonc (the modern JSONC format), not wrangler.toml. To enable the Cloudflare AI binding in wrangler.jsonc, add an ai binding under the top-level object like this:

```jsonc
"ai": {
  "binding": "AI"
},
```

So, your config should look like this (add it after "assets" or "observability"):

```jsonc
{
  // ...existing config...
  "assets": {
    "binding": "ASSETS",
    "directory": "./public"
  },
  "ai": {
    "binding": "AI"
  },
  "observability": {
    "enabled": true
  }
  // ...rest of config...
}
```

This will enable the AI binding for your Worker.

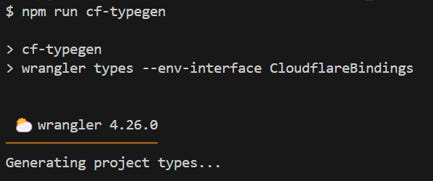

(17) npm run cf-typegen

(18) Add an AI.run(model, input) call to src/index.ts:

```ts
// src/index.ts
import { Hono } from "hono";

// CloudflareBindings is the type generated by `npm run cf-typegen` (step 17).
const app = new Hono<{ Bindings: CloudflareBindings }>();

app.get("/message", (c) => {
  return c.text("Hello Hono2!");
});

// Calls Llama 3.1 8B Instruct through the AI binding declared in wrangler.jsonc.
app.get("/hello-ai", async (c) => {
  const results = await c.env.AI.run("@cf/meta/llama-3.1-8b-instruct", {
    messages: [{ role: "user", content: "What is the capital of France?" }],
  });
  return c.json(results);
});

export default app;
// export const fetch = app.fetch;
```
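The /hello-ai route returns whatever `AI.run` returns. For text-generation models such as llama-3.1-8b-instruct, Workers AI returns a JSON object with the generated text in a `response` field (other fields, such as token usage, may also appear). A minimal sketch, assuming that shape, of a helper that pulls out the text; `TextGenResult` and `extractAnswer` are hypothetical names, not part of the Workers AI API:

```typescript
// Hypothetical shape: only the part of a Workers AI text-generation result used here.
interface TextGenResult {
  response?: string;
  [key: string]: unknown;
}

// Returns the generated text, or a JSON dump if the expected field is missing.
function extractAnswer(result: unknown): string {
  if (typeof result === "object" && result !== null) {
    const r = result as TextGenResult;
    if (typeof r.response === "string") {
      return r.response;
    }
  }
  // JSON.stringify returns undefined for undefined input, hence the String() fallback.
  return JSON.stringify(result) ?? String(result);
}

// Usage with a made-up result object (not real model output):
const sample: TextGenResult = { response: "The capital of France is Paris." };
console.log(extractAnswer(sample)); // prints "The capital of France is Paris."
```

The fallback keeps error payloads such as `{ "error": "Missing question" }` visible instead of silently showing nothing.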

1.3 4:25 Deploying the application globally! (the video does not test the deployment)

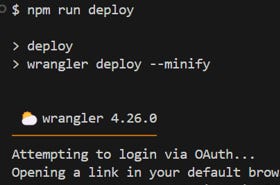

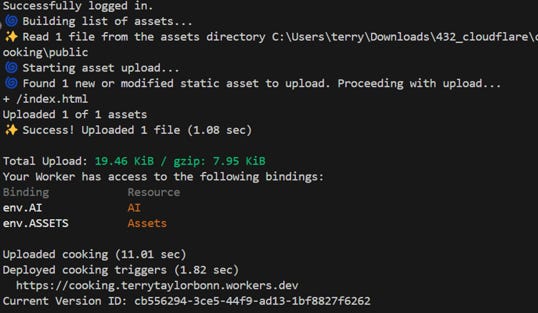

(19) npm run deploy

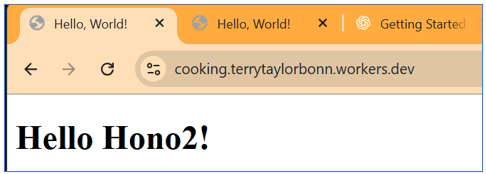

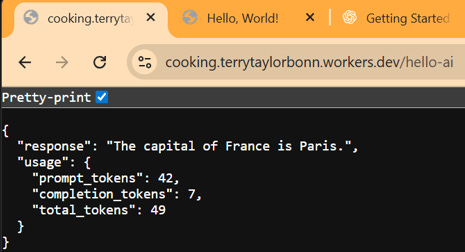

(20) https://cooking.terrytaylorbonn.workers.dev

(21) https://cooking.terrytaylorbonn.workers.dev/hello-ai

Ch 3 CPLT add public POST endpoint for prompts

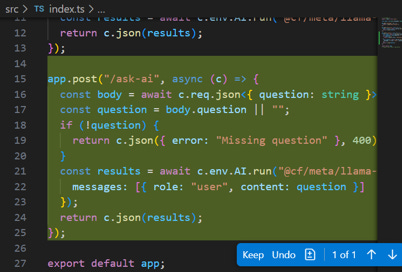

(22) CPLT7 add POST /ask-ai in the Hono app

A POST /ask-ai endpoint has been added. You can now send a JSON body like { "question": "Your question here" } to this endpoint, and it will return the AI model's response.

```ts
// src/index.ts
import { Hono } from "hono";

const app = new Hono<{ Bindings: CloudflareBindings }>();

app.get("/message", (c) => {
  return c.text("Hello Hono2!");
});

app.get("/hello-ai", async (c) => {
  const results = await c.env.AI.run("@cf/meta/llama-3.1-8b-instruct", {
    messages: [{ role: "user", content: "What is the capital of France?" }],
  });
  return c.json(results);
});

// Expects a JSON body like { "question": "..." } and returns the model's reply.
app.post("/ask-ai", async (c) => {
  const body = await c.req.json<{ question: string }>();
  const question = body.question || "";
  if (!question) {
    return c.json({ error: "Missing question" }, 400);
  }
  const results = await c.env.AI.run("@cf/meta/llama-3.1-8b-instruct", {
    messages: [{ role: "user", content: question }],
  });
  return c.json(results);
});

export default app;
// export const fetch = app.fetch;
```
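In the /ask-ai handler, a request body that is not valid JSON makes `c.req.json()` throw, which Hono's default error handler turns into a 500 rather than a 400. A minimal, framework-free sketch of stricter validation, assuming you read the raw body text yourself; `parseQuestion` and `ParseResult` are hypothetical names:

```typescript
// Result of validating an /ask-ai request body (hypothetical type).
type ParseResult =
  | { ok: true; question: string }
  | { ok: false; status: 400; error: string };

// Validates a raw request body such as '{"question":"..."}'.
function parseQuestion(rawBody: string): ParseResult {
  let body: unknown;
  try {
    body = JSON.parse(rawBody);
  } catch {
    return { ok: false, status: 400, error: "Body is not valid JSON" };
  }
  // Only accept an object with a non-empty string "question" field.
  const question =
    typeof body === "object" && body !== null && "question" in body
      ? (body as { question: unknown }).question
      : undefined;
  if (typeof question !== "string" || question.trim() === "") {
    return { ok: false, status: 400, error: "Missing question" };
  }
  return { ok: true, question: question.trim() };
}
```

Inside the route, `const parsed = parseQuestion(await c.req.text());` followed by `if (!parsed.ok) return c.json({ error: parsed.error }, parsed.status);` would return 400 for both malformed JSON and a missing or blank question.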

(23) npm run deploy

I did not document this step, but I believe you need to deploy at this point.

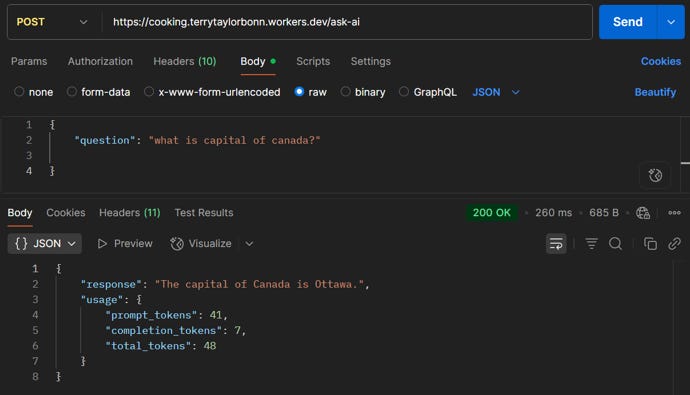

(24) Test with a Postman POST to https://cooking.terrytaylorbonn.workers.dev/ask-ai (JSON body: { "question": "Your question here" })
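Instead of Postman, you can send the same request from a script. A minimal sketch, assuming Node 18+ (which has a global `fetch`) or any runtime with the Fetch API; `buildAskRequest` and `askAi` are hypothetical helper names, and the URL is the Worker deployed above:

```typescript
const ASK_AI_URL = "https://cooking.terrytaylorbonn.workers.dev/ask-ai";

// Builds the same POST request Postman sends: a JSON body with a "question" field.
function buildAskRequest(question: string): RequestInit {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ question }),
  };
}

// Sends the question and returns the parsed JSON reply.
// Throws on a non-2xx status so errors like 400 "Missing question" are visible.
async function askAi(question: string, url: string = ASK_AI_URL): Promise<unknown> {
  const res = await fetch(url, buildAskRequest(question));
  const data: unknown = await res.json();
  if (!res.ok) {
    throw new Error(`HTTP ${res.status}: ${JSON.stringify(data)}`);
  }
  return data;
}
```

Usage: `askAi("What is the capital of France?").then(console.log).catch(console.error);` prints the Worker's JSON reply.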

Ch 4 Add prompt entry textbox on docs3.ziptieai.com

Notes:

This section does not provide all the info you need to duplicate the docs3.ziptieai.com website. I show what I did to update docs3. You would do something similar for your own site.

The original docx for this section contains many fixes. I distilled them into what should be the correct final procedure, but I have not retested it. If you have problems, you can:

Refer back to docx #432

Take the final code from my GitHub repo

4.1 Edit the Docusaurus site source

(25) Open a new VS Code window for the Docusaurus project

CPLT8 Ready to update Docusaurus

Next, for the Docusaurus integration, here’s the plan:

Add a new React page or component to your Docusaurus site (e.g., “AI Chat”).

The page will have a form where users can type a question.

On submit, it will POST to your Worker’s /ask-ai endpoint and display the AI’s response.

(26) Create new branch br14 for #432

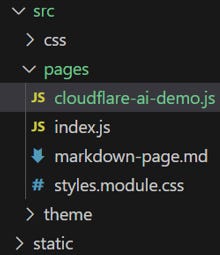

(27) Create src/pages/cloudflare-ai-demo.js

```jsx
import React, { useState } from 'react';

// Worker endpoint deployed in Ch 3.
const ENDPOINT = 'https://cooking.terrytaylorbonn.workers.dev/ask-ai';

export default function CloudflareAIDemo() {
  const [question, setQuestion] = useState('');
  const [answer, setAnswer] = useState('');
  const [loading, setLoading] = useState(false);

  async function handleAsk(e) {
    e.preventDefault(); // stop the form submit from reloading the page
    setLoading(true);
    setAnswer('');
    try {
      const res = await fetch(ENDPOINT, {
        method: 'POST',
        headers: { 'Content-Type': 'application/json' },
        body: JSON.stringify({ question }),
      });
      const data = await res.json();
      // Workers AI text generation puts the generated text in "response";
      // the Worker returns { error } with status 400 for a missing question.
      setAnswer(data.response || data.error || JSON.stringify(data));
    } catch (err) {
      setAnswer('Error: ' + err.message);
    } finally {
      setLoading(false);
    }
  }

  return (
    <div
      style={{
        maxWidth: 600,
        margin: '2rem auto',
        padding: 20,
        border: '1px solid #eee',
        borderRadius: 8,
        color: '#222',
        background: '#fff',
      }}
    >
      <h1>Cloudflare AI Demo</h1>
      <form onSubmit={handleAsk}>
        <input
          type="text"
          value={question}
          onChange={(e) => setQuestion(e.target.value)}
          placeholder="Ask a question..."
          style={{ width: '80%', padding: 8, color: '#222', background: '#fff', border: '1px solid #ccc' }}
        />
        <button type="submit" disabled={loading} style={{ marginLeft: 8 }}>
          {loading ? 'Asking...' : 'Ask'}
        </button>
      </form>
      {answer && (
        <div style={{ marginTop: 20, background: '#f9f9f9', padding: 16, borderRadius: 4, color: '#222' }}>
          <strong>Answer:</strong>
          <div>{answer}</div>
        </div>
      )}
    </div>
  );
}
```

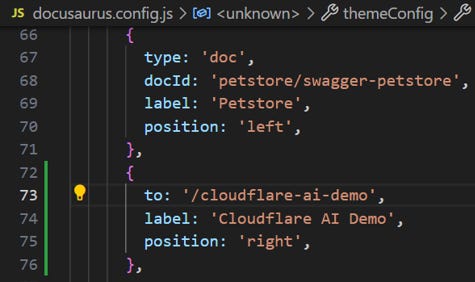

(28) Add the page to your Docusaurus navbar

Edit docusaurus.config.js and add to the navbar.items array:

```js
{
  to: '/cloudflare-ai-demo',
  label: 'Cloudflare AI Demo',
  position: 'right',
},
```

(29) CPLT11 CORS

This is a classic CORS (Cross-Origin Resource Sharing) issue. Your Docusaurus site (localhost:3000) is trying to call your Cloudflare Worker endpoint, but the Worker is not sending the required CORS headers.

How to fix: update your Cloudflare Worker code so that its responses include the appropriate CORS headers. For development, a minimal approach is to allow requests from any origin, and to also handle the OPTIONS preflight request.

Summary:

Add Access-Control-Allow-Origin and related headers to all responses.

Handle OPTIONS requests for preflight.

After you update and redeploy your Worker, your Docusaurus site should be able to call it from localhost without CORS errors.


```ts
// src/index.ts WITH CORS
import { Hono } from "hono";

const app = new Hono<{ Bindings: CloudflareBindings }>();

// CORS middleware
app.use('/ask-ai', async (c, next) => {
  // Handle preflight
  if (c.req.method === 'OPTIONS') {
    return c.newResponse(null, 204, {
      'Access-Control-Allow-Origin': '*',
      'Access-Control-Allow-Methods': 'POST, OPTIONS',
      'Access-Control-Allow-Headers': 'Content-Type',
    });
  }
  await next();
  // Add CORS headers to all responses
  c.res.headers.set('Access-Control-Allow-Origin', '*');
  c.res.headers.set('Access-Control-Allow-Methods', 'POST, OPTIONS');
  c.res.headers.set('Access-Control-Allow-Headers', 'Content-Type');
});

app.get("/message", (c) => {
  return c.text("Hello Hono2!");
});

app.get("/hello-ai", async (c) => {
  const results = await c.env.AI.run("@cf/meta/llama-3.1-8b-instruct", {
    messages: [{ role: "user", content: "What is the capital of France?" }],
  });
  return c.json(results);
});

app.post("/ask-ai", async (c) => {
  const body = await c.req.json<{ question: string }>();
  const question = body.question || "";
  if (!question) {
    return c.json({ error: "Missing question" }, 400);
  }
  const results = await c.env.AI.run("@cf/meta/llama-3.1-8b-instruct", {
    messages: [{ role: "user", content: question }],
  });
  return c.json(results);
});

export default app;
```
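The middleware above allows any origin (`*`), which is fine for development. The same two rules (add headers to every response, answer OPTIONS preflights) can be written without Hono, and tightened to an allowlist once the site is public. A minimal sketch using only the standard Fetch API `Request`/`Response`/`Headers` classes (available in Workers and Node 18+); `ALLOWED_ORIGINS`, `corsHeaders`, `handlePreflight`, and `withCors` are hypothetical names. Hono also ships a built-in `cors` middleware (imported from `hono/cors`) that can replace the hand-written one.

```typescript
// Hypothetical allowlist: the production site and the local Docusaurus dev server.
const ALLOWED_ORIGINS: string[] = ["https://docs3.ziptieai.com", "http://localhost:3000"];

// Returns CORS headers for an allowed Origin, or an empty object otherwise
// (the browser then blocks the cross-origin response).
function corsHeaders(origin: string | null): Record<string, string> {
  if (origin === null || ALLOWED_ORIGINS.indexOf(origin) === -1) {
    return {};
  }
  return {
    "Access-Control-Allow-Origin": origin,
    "Access-Control-Allow-Methods": "POST, OPTIONS",
    "Access-Control-Allow-Headers": "Content-Type",
    // The reply depends on the Origin header, so caches must key on it.
    Vary: "Origin",
  };
}

// Answers an OPTIONS preflight with 204 No Content; returns null for other methods.
function handlePreflight(req: Request): Response | null {
  if (req.method !== "OPTIONS") {
    return null;
  }
  return new Response(null, { status: 204, headers: corsHeaders(req.headers.get("Origin")) });
}

// Returns a copy of `res` (same body, status, headers) with CORS headers added.
function withCors(res: Response, origin: string | null): Response {
  const out = new Response(res.body, res);
  const extra = corsHeaders(origin);
  for (const name of Object.keys(extra)) {
    out.headers.set(name, extra[name]);
  }
  return out;
}
```

In a plain Worker `fetch(req)` handler you would return `handlePreflight(req)` when it is not null, and otherwise pass the normal response through `withCors(res, req.headers.get("Origin"))`. Note that CORS only restricts browsers; scripts and Postman can still call the endpoint directly.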

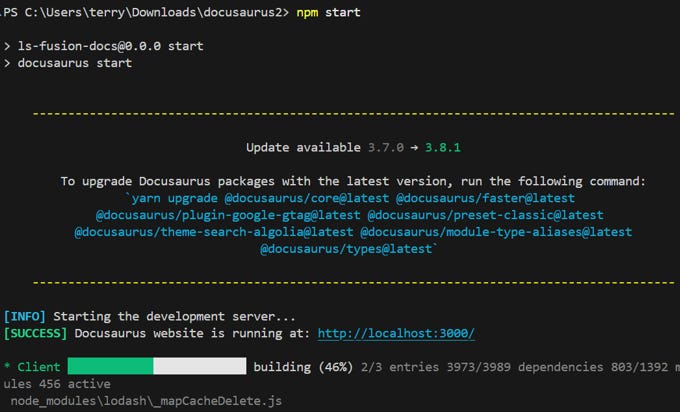

(30) CPLT15 npm start (test local)

npm run serve serves the static production build (from the build directory). It does NOT watch for file changes or hot-reload your edits.

npm start runs the Docusaurus development server, which hot-reloads your changes as you edit files.

Summary:

Use npm start for development and live preview of changes.

Use npm run build + npm run serve only to preview the final production build (not for development).

Switch to npm start, make your changes, and you should see your style updates immediately!

The page's inline styles (color '#222', background '#fff') will always show dark text on a white background, and will not throw the error.


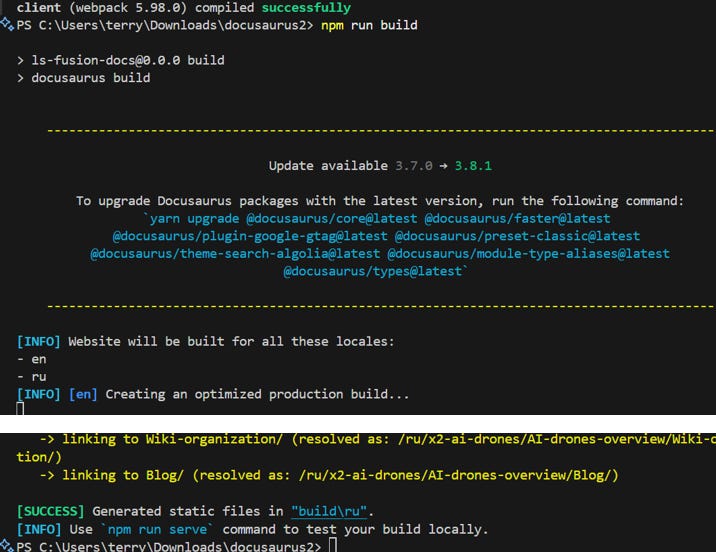

(31) npm run build

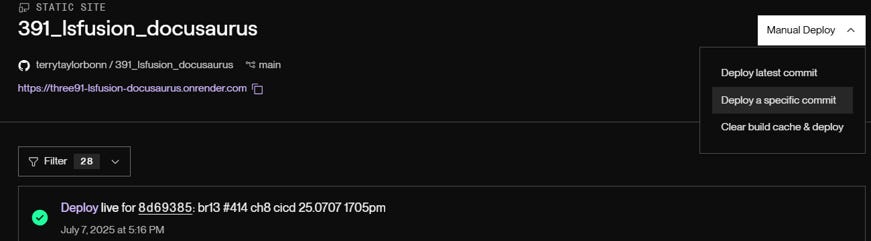

4.2 deploy to docs3.ziptieai.com

(32) Push to br14 (created in step 26)

(33) Deploy to Render

(34) docs3.ziptieai.com

Ch 5 Post-demo summary

5.1 CF videos remind me why I created the AI LLM sandbox

When I first tried Cloudflare AI (for #432):

I tried a YouTube video from CF. It was outdated (8 months old), and only thanks to Copilot (CPLT) could I quickly fix the problems. The video was very polished, though, and fun to watch.

I wondered if anyone who commented on the video actually followed its steps.

At least the video was better than typical docs: it focused on what you need to know to get started.

But the videos are not organized into a cohesive whole.

That’s a typical experience, and explains why I created the sandbox.

5.2 Will Cloudflare AI replace AWS/GCP AI?

Is Cloudflare AI basically doing (or will it do) the same as AWS/GCP, but with:

No trick pricing

Very simple model setup

No cost

DEMO. ANALYZE. DOCUMENT. REPEAT.