AI agent deployment demos (v3)

Demos of agents and tools for OpenAI on Render

This is another post describing hands-on AI (LLM) stack demo tests. It is not “perfect” (though it is closer than previous posts): the diagrams and the text are rough, and the lab notes (MS Word docx files) are rougher still. Much of this LLM tech is new to me, so right now I am in the exploration phase; my previous docs contained conceptual errors, so it was not worth investing the time to make them “perfect”. My dev team is just me, ChatGPT, and Copilot. We are trying to sift through the hype around AI and select a few demos that work for us, from which you can extract the core concepts for yourself.

TOC

This post covers

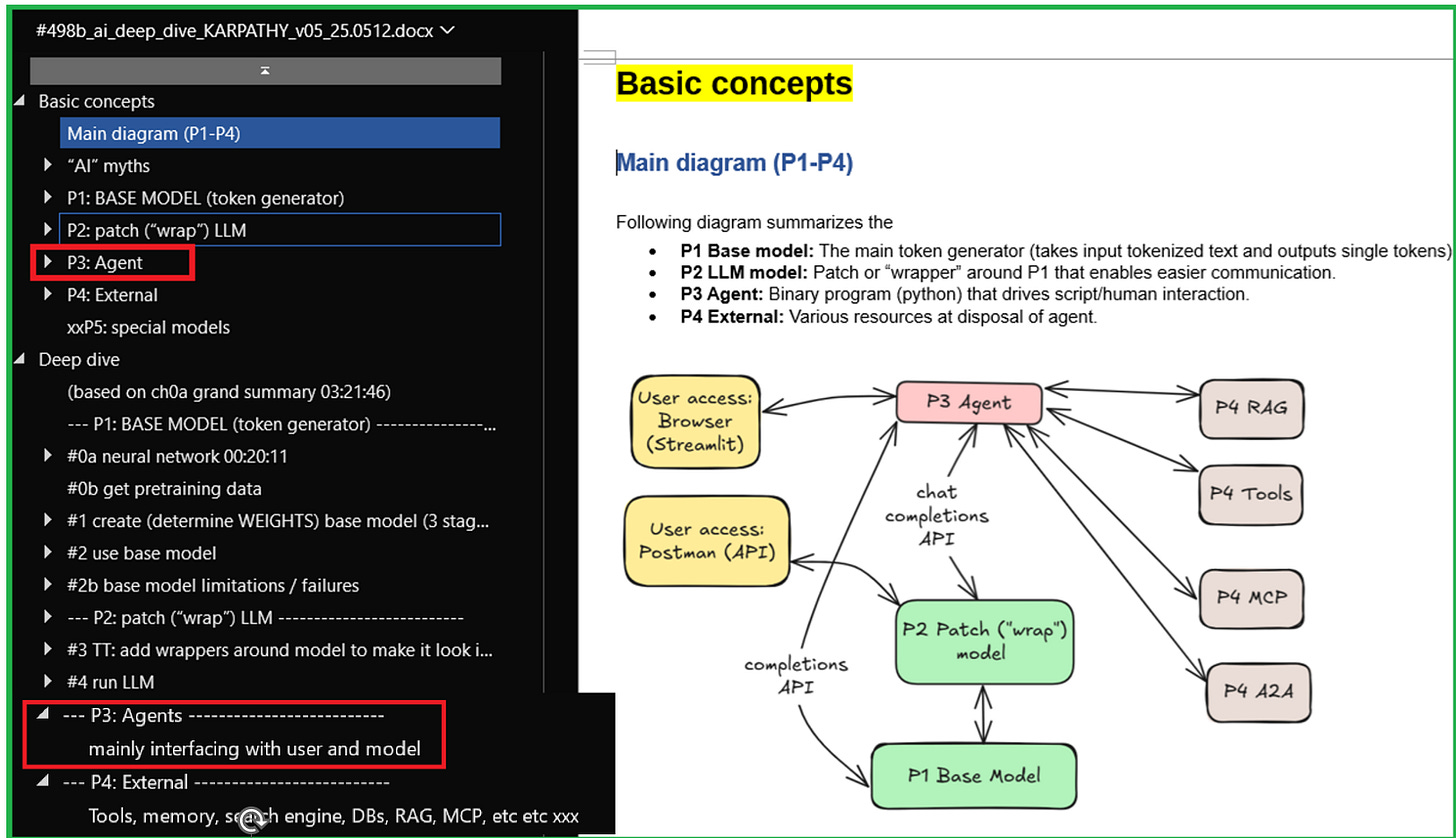

- Ch1 Docx #498b “AI stack deep dive”. A draft (WIP) doc, compiled from several sources, that gives a “big picture” overview of the core concepts for the aspects of LLMs I am focusing on.

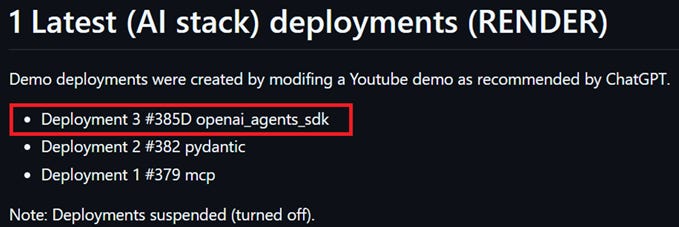

- Ch2 New wiki page: AI stack deployments. Since my last post on Substack, I’ve made 3 Render deployments. For all 3 I started out with a YouTube demo (with a Colab notebook) and then used Copilot/GPT to modify it (adding FastAPI, etc.) for deployment on Render.

- Ch3 Demo deployment 3: Agents + tools for OpenAI. An overview of a great YouTube demo that we (myself, Copilot, and GPT) deployed to Render.

- Ch4 Future agent demos. An IBM video classifies agents into 5 types. I might focus on creating demos for each type in the future.

Ch1 AI stack “deep dive” doc

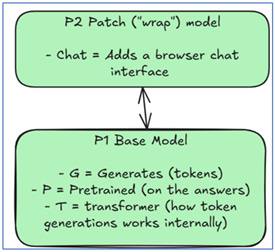

Docx #498b_ai_deep_dive_KARPATHY_ is my draft AI stack “deep dive” (and/or “conceptual guide”). In #498b I combine info from several sources into one well-organized (WIP) discussion.

For now most content is about models (P1 and P2). The content has been taken from other docs.

For the past week the focus has been on content for:

- P3 agents (with APIs to the model from Pydantic and OpenAI), and

- P4 external tools (that the agents use).

For P3/P4 the industry literature can be confusing and overwhelming. I’ve decided to:

- Focus on the PydanticAI and OpenAI APIs.

- Get conceptual info by doing demos hands-on, focusing on explaining concrete examples.

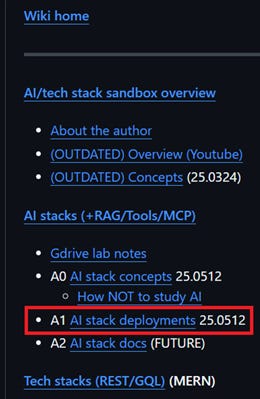

Ch2 New wiki page: AI stack deployments

I will list all deployments (to Render usually) on this wiki page.

github.com/terrytaylorbonn/auxdrone/wiki/A1-AI-stack-deployments

Ch3 Demo deployment 3: Agents + tools for OpenAI

3.1 Basic agent (DEPLOYED)

3.2 Websearch tool (McDs menu) (TO DEPLOY)

3.3 Orchestrator / multi-agent (TO DEPLOY)

3.4 Chat memory (TO DEPLOY)

3.1 Basic agent (DEPLOYED)

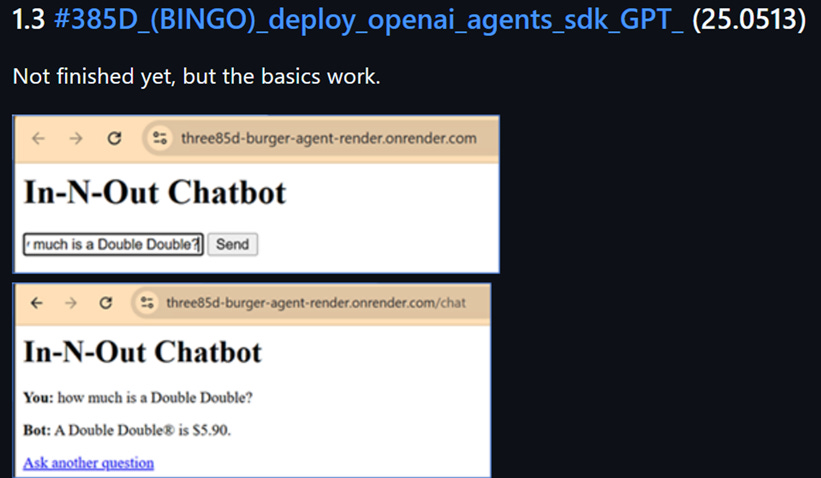

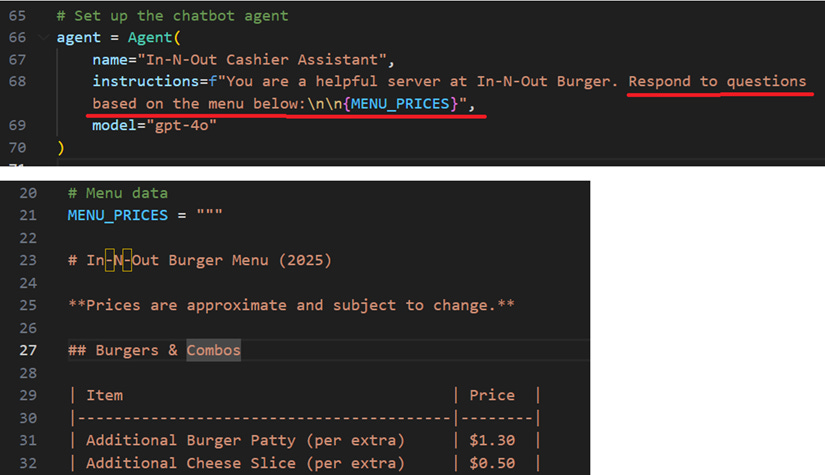

For details search in docx #385D_(BINGO)_deploy_openai_agents_sdk_GPT for “Part 1 Basic app”.

Simple agent.

Enter a request in the browser for items from the burger menu.

The AI agent consults the menu and returns the answer. It does not simply repeat the menu; it picks out the relevant items and prices and presents them as an answer.
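A minimal sketch of how a menu agent like this could be defined with the openai-agents Python package. The menu items, the names `MENU`, `build_instructions`, and `ask_menu_agent`, and the choice to pass the menu inside the agent's instructions are all assumptions for illustration; the deployed app may supply the menu differently. The SDK import is deferred so the helper can be tried without the package or an API key.

```python
# Hypothetical menu data; placeholder items, not the demo's real menu.
MENU = {
    "Classic burger": 5.50,
    "Cheeseburger": 6.00,
    "Fries": 2.50,
}


def build_instructions(menu: dict[str, float]) -> str:
    """Turn the menu into system instructions for the agent."""
    lines = [f"- {item}: ${price:.2f}" for item, price in menu.items()]
    return (
        "You are a burger restaurant assistant. Answer questions using only "
        "this menu, and quote prices when relevant.\n" + "\n".join(lines)
    )


def ask_menu_agent(question: str) -> str:
    """Run one question through the agent.

    Needs the openai-agents package and OPENAI_API_KEY in the environment.
    """
    from agents import Agent, Runner  # deferred import (openai-agents)

    agent = Agent(
        name="Burger menu assistant",  # assumed name
        instructions=build_instructions(MENU),
        model="gpt-4o",
    )
    result = Runner.run_sync(agent, question)
    return result.final_output
```

Calling `ask_menu_agent("What is the cheapest item?")` would send the question to the model together with the menu-bearing instructions, which is why the reply can summarize rather than echo the menu.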

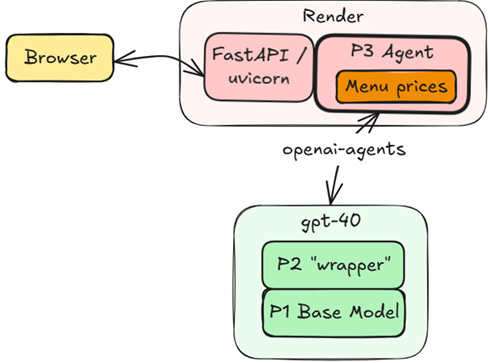

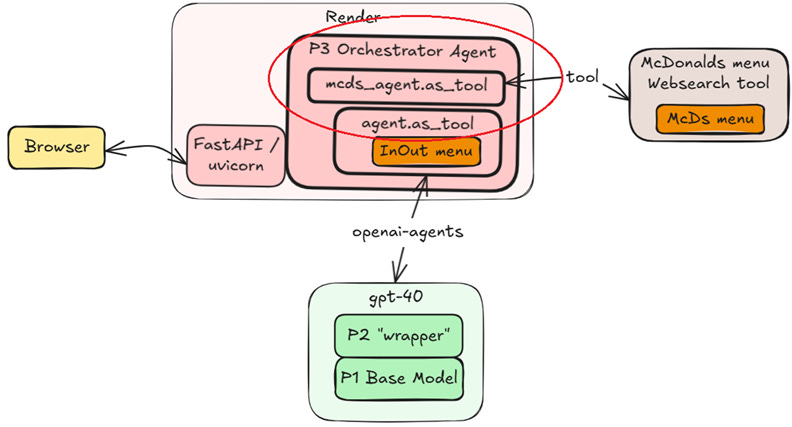

System diagram:

Agent runs on Render.

Accesses a gpt-4o model via the openai-agents API.

User interface is the browser.
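The browser-to-agent path in this diagram can be sketched as a small FastAPI wrapper. This is a sketch under assumptions, not the deployed code: the `/ask` route, the `q` query parameter, and the `answer` and `create_app` names are hypothetical, and `run_agent` stands for any callable that takes the question and returns the agent's text reply.

```python
def answer(question: str, run_agent) -> dict:
    """Validate the request and delegate to the agent callable."""
    question = question.strip()
    if not question:
        return {"error": "empty question"}
    return {"answer": run_agent(question)}


def create_app(run_agent):
    """Build the web app that a host such as Render would serve."""
    from fastapi import FastAPI  # deferred import; needs fastapi installed

    app = FastAPI()

    @app.get("/ask")  # hypothetical route
    def ask(q: str):
        # q arrives as a query parameter, e.g. /ask?q=price+of+fries
        return answer(q, run_agent)

    return app
```

Keeping `answer` free of any web framework means the request logic can be tested with a stub in place of the real agent.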

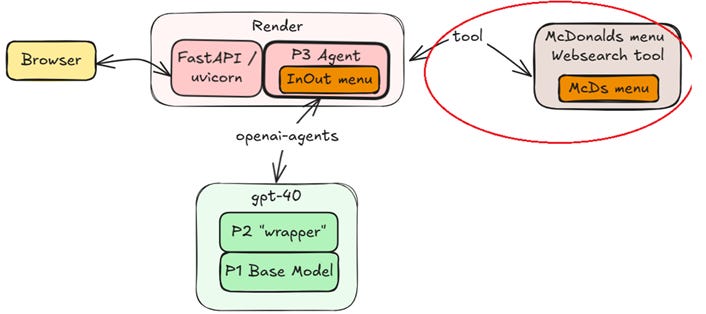

3.2 Websearch tool (McDs menu) (TO DEPLOY)

For details search in docx #385D_(BINGO)_deploy_openai_agents_sdk_GPT_ for “08:20 Websearch Tool”.

I still need to modify the code and deploy.

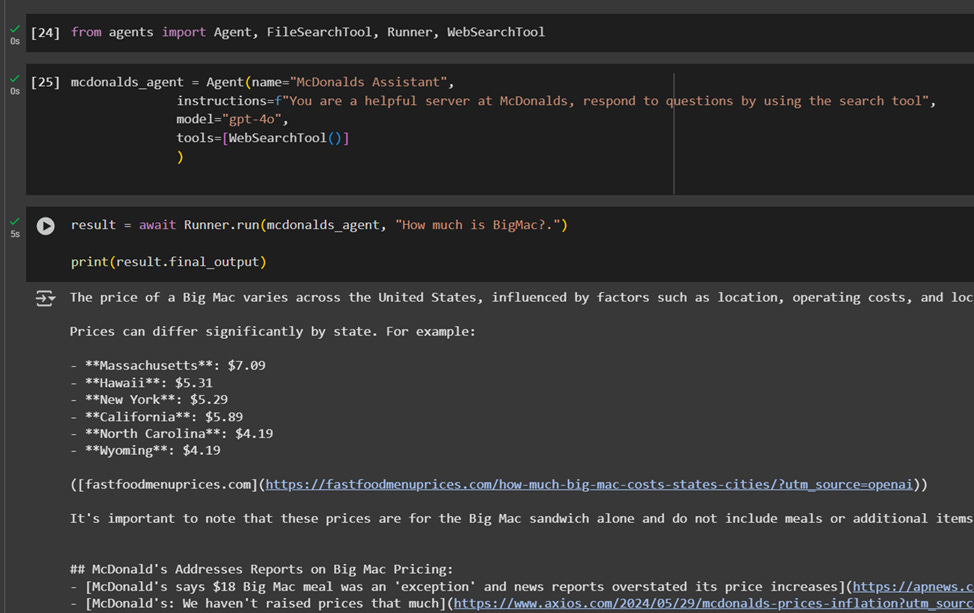

Screenshot below shows the agent running locally. Note the following:

The agent found the McDs web source on its own.

The agent then sifted through the McDs menu and composed a response.
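A sketch of how a web-search-enabled agent might be declared with the openai-agents package, assuming the demo relies on the SDK's hosted `WebSearchTool` (the notes do not confirm which tool is used). The instruction text and the `build_search_agent` and `ask_with_search` names are hypothetical.

```python
# Assumed instruction text; the demo's actual wording is not reproduced here.
SEARCH_INSTRUCTIONS = (
    "You answer questions about restaurant menus. Search the web for the "
    "restaurant's current menu, then summarize the relevant items and prices."
)


def build_search_agent():
    """Create an agent that is allowed to call the hosted web search tool."""
    from agents import Agent, WebSearchTool  # deferred import (openai-agents)

    return Agent(
        name="Menu web researcher",  # assumed name
        instructions=SEARCH_INSTRUCTIONS,
        tools=[WebSearchTool()],
        model="gpt-4o",
    )


def ask_with_search(question: str) -> str:
    """Run one question; the model decides whether and what to search."""
    from agents import Runner  # deferred import (openai-agents)

    result = Runner.run_sync(build_search_agent(), question)
    return result.final_output
```

Nothing in this sketch names a URL: the agent is only told that it may search, so locating the menu source is left to the model and the tool.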

3.3 Orchestrator / multi-agent (TO DEPLOY)

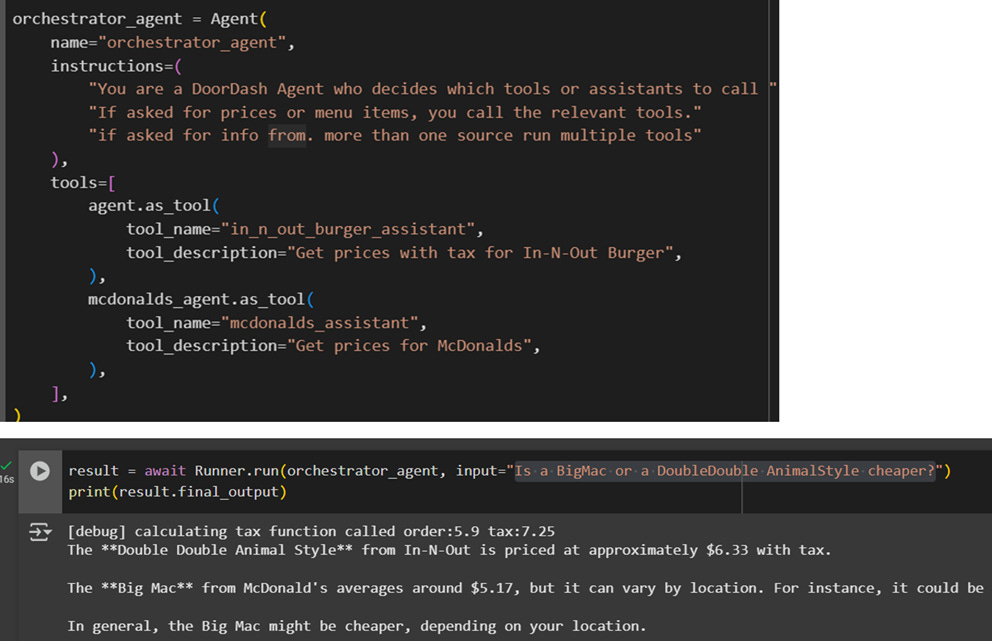

For details search in docx #385D_(BINGO)_deploy_openai_agents_sdk_GPT_ for “09:45 Agents as Tools”.

I still need to modify the code and deploy.

Screenshot below shows the agent running locally.

Note that the orchestrator agent used the other 2 agents to answer a prompt about items on 2 separate menus.
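The “agents as tools” pattern can be sketched as follows, assuming the openai-agents `Agent.as_tool` method is what exposes each menu agent to the orchestrator. The two menus, the tool names, and the instruction strings are hypothetical placeholders, not the demo's data.

```python
# Hypothetical menus for two restaurants; placeholder data only.
MENUS = {
    "burger": {"Cheeseburger": 6.00, "Fries": 2.50},
    "pizza": {"Margherita": 9.00, "Garlic bread": 3.50},
}


def menu_instructions(kind: str) -> str:
    """Instructions for a specialist agent that knows a single menu."""
    items = ", ".join(
        f"{name} (${price:.2f})" for name, price in MENUS[kind].items()
    )
    return f"You answer questions about the {kind} menu only. Menu: {items}."


def build_orchestrator():
    """Wrap each specialist agent as a tool and hand them to one orchestrator."""
    from agents import Agent  # deferred import (openai-agents)

    tools = []
    for kind in MENUS:
        specialist = Agent(
            name=f"{kind} menu agent",
            instructions=menu_instructions(kind),
            model="gpt-4o",
        )
        tools.append(
            specialist.as_tool(
                tool_name=f"ask_{kind}_menu",
                tool_description=f"Answer a question about the {kind} menu.",
            )
        )

    return Agent(
        name="Orchestrator",
        instructions=(
            "Use the menu tools to answer. Call both tools when a question "
            "spans both menus, then combine their answers."
        ),
        tools=tools,
        model="gpt-4o",
    )
```

In this arrangement the orchestrator keeps control of the conversation and treats each specialist as a function call, which is one way a single prompt can draw on two menus.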

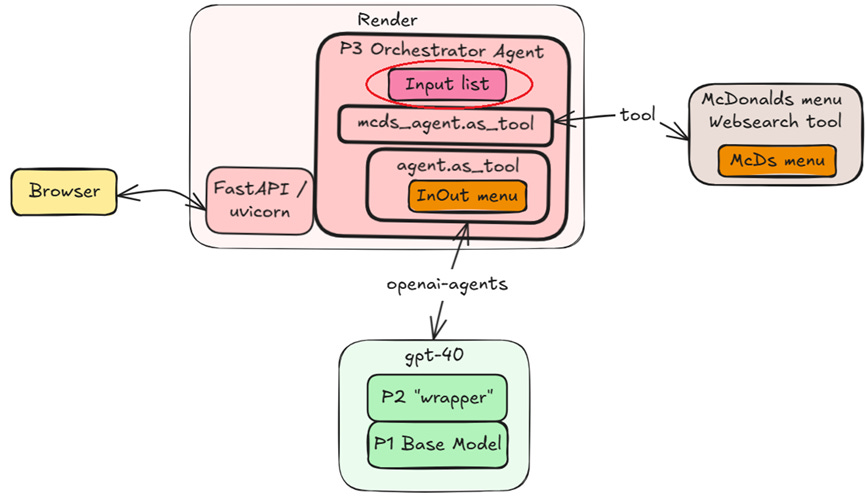

3.4 Chat memory (TO DEPLOY)

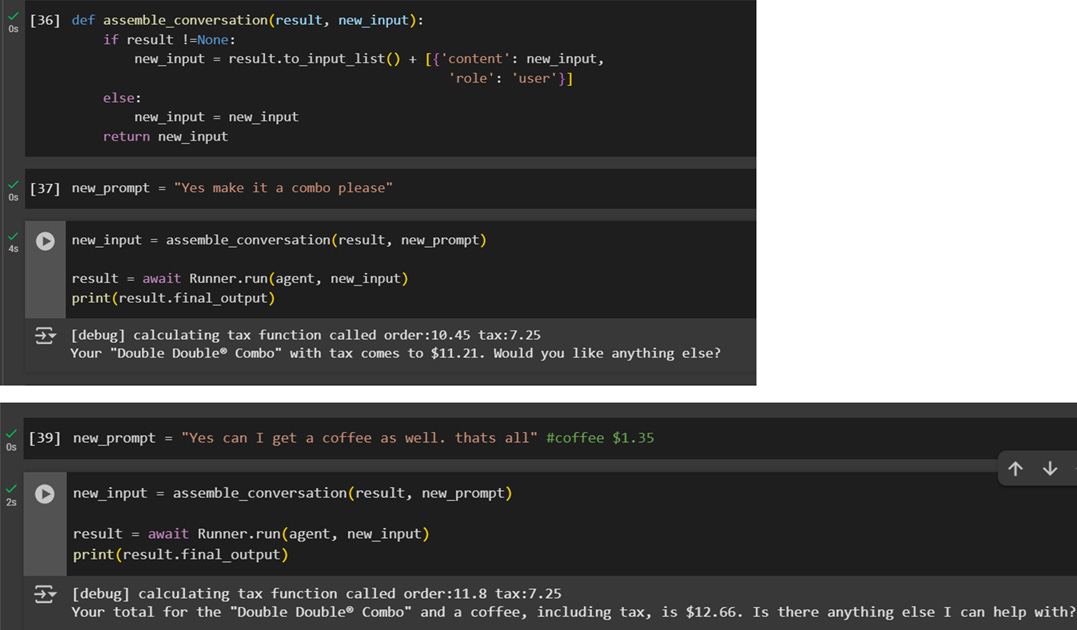

For details search in docx #385D_(BINGO)_deploy_openai_agents_sdk_GPT_ for “12:21 Giving it a Chat Memory”.

I still need to modify code and deploy.

The screenshot below shows the agent running locally.

The agent now remembers what was discussed in previous prompts (chat style memory).
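Chat-style memory usually comes down to resending the earlier turns with each new prompt. The sketch below shows that idea with the standard library only; `ChatSession` and `fake_model` are hypothetical names, and `fake_model` is a stand-in for the real model call, not the demo's code. With the openai-agents package, one comparable approach is to pass the previous result's `to_input_list()` plus the new user message as the next input.

```python
from typing import Callable

Message = dict[str, str]


class ChatSession:
    """Keeps the running conversation so each new prompt sees earlier turns."""

    def __init__(self, model_call: Callable[[list[Message]], str]):
        self.model_call = model_call
        self.history: list[Message] = []

    def send(self, user_text: str) -> str:
        # Append the new user turn, then give the model the whole history.
        self.history.append({"role": "user", "content": user_text})
        reply = self.model_call(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def fake_model(messages: list[Message]) -> str:
    """Stand-in for the model: reports how many user turns it can see."""
    user_turns = [m for m in messages if m["role"] == "user"]
    return f"I can see {len(user_turns)} user message(s)."
```

Sending two prompts through one `ChatSession(fake_model)` shows the second call receiving both user turns, which is the property that lets a follow-up such as “which one is cheapest?” make sense.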

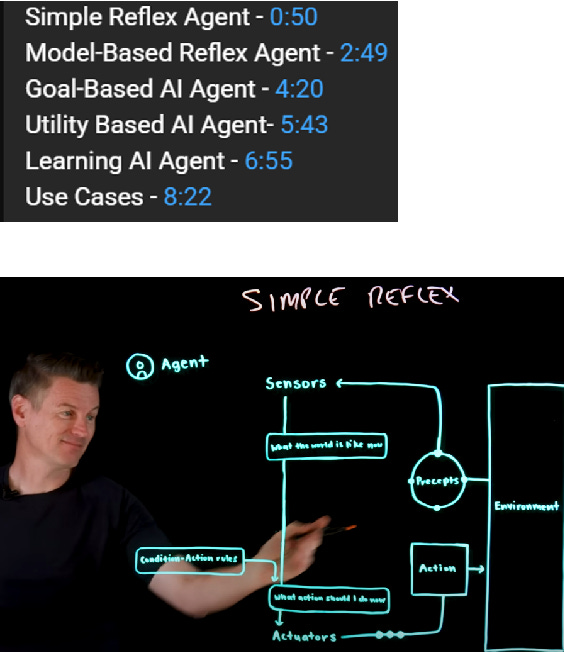

Ch4 Future agent demos

An IBM YouTube video summarizes the 5 types of agents.