DEMO. ANALYZE. DOCUMENT. REPEAT.

For the past couple of weeks I’ve been working on the draft version (#438c_gpt3_decoded_BOOK_.docx) of a book that will decode the tech details of how GPT-3 uses GPUs.

This is the key to understanding how GPT-3 “intelligence” (token probabilities) is computed by binary GPU hardware.
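
To make “token probabilities” concrete, here is a minimal sketch, not the book's own code. A language model like GPT-3 ends each forward pass with one raw score (a logit) per vocabulary token, and a softmax turns those scores into probabilities. The four-token vocabulary and the logit values below are made up for illustration; the real model has a vocabulary of roughly 50,000 tokens and does this arithmetic on the GPU in floating point.

```python
import math

def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical vocabulary and logits, chosen only for illustration.
vocab = ["cat", "dog", "the", "GPU"]
logits = [2.0, 1.0, 0.1, 3.0]

probs = softmax(logits)
for token, p in zip(vocab, probs):
    print(f"{token}: {p:.3f}")
```

The token with the largest logit gets the largest probability, and the probabilities always sum to 1, which is what lets the model sample or pick the next token.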

Book chapters “Step 1” to “Step 10” are the core of the doc.

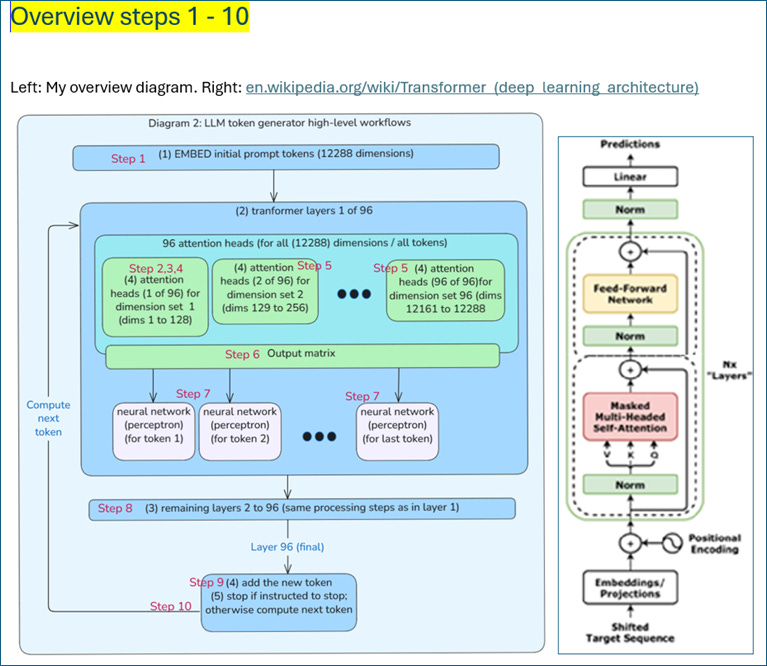

The most challenging (and original) part of this document was determining the structure and content (WIP) of these chapters. Chapter “Overview steps 1 - 10” shows how these chapters cover the overall GPU computational workflow, using my own diagram (below left) and a diagram from Wikipedia (below right; I still need to add the steps to this diagram).

I will keep working with GPT-5 (my co-pilot on this project) until I have all of the tech details correct (this will take some time). I will gradually polish the formatting and graphics. This is a long-term project.

This book has only been possible without human assistance thanks to:

- The assistance of GPT-5, whose very accurate and detailed technical descriptions have made it possible for me to reach the level of detail needed to understand the basics of how GPT-3 uses GPUs (GPT-5 discussions are referenced throughout this book).

- The 3Blue1Brown YouTube videos on neural networks (referenced in this book).
